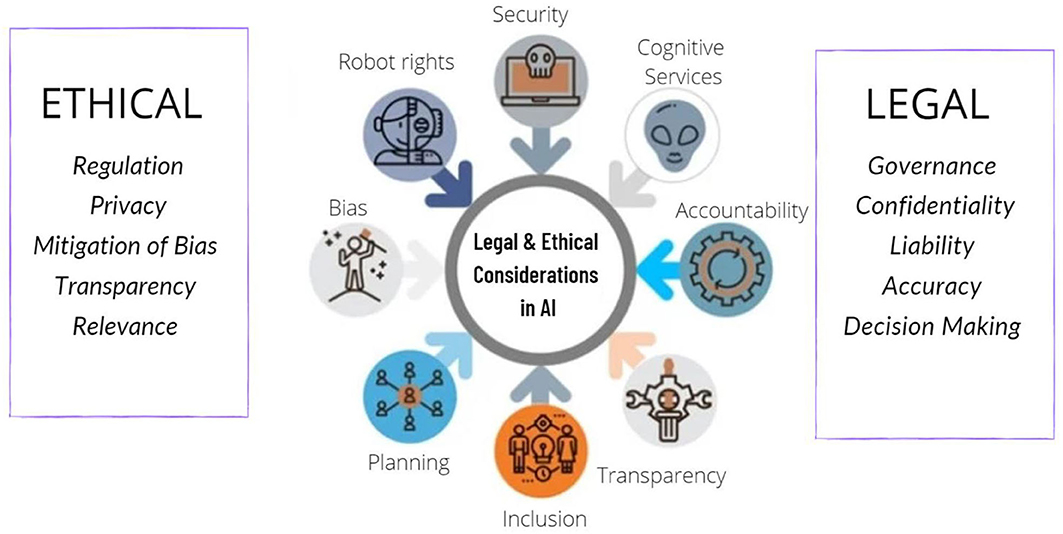

Artificial Intelligence (AI) is changing our world. It helps in many fields, from healthcare to education. But as we develop AI, we must think about ethics. Ethics are principles about what is right and wrong. In this article, we will discuss the main ethical considerations in AI development.

1. Fairness

AI should be fair to everyone. It should not favor one group over another. When it does, this is called bias. Bias can happen if AI is trained on data that is not balanced. For example, if an AI is trained on data that mostly includes men, it might not work well for women. We need to check that AI works equally well for every group it serves.

| Problem | Solution |
|---|---|
| Bias in data | Use balanced data |
| Favoring one group | Check for fairness |
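
One simple way to "check for fairness" is to measure how well a model works for each group separately. The sketch below is only an illustration: the function names, the group labels, and the sample records are all made up, and accuracy per group is just one of several possible fairness checks.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


def largest_gap(per_group):
    """Difference between the best and the worst group accuracy."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical evaluation results, invented for this example.
records = [
    ("men", 1, 1), ("men", 0, 0), ("men", 1, 1), ("men", 0, 0),
    ("women", 1, 0), ("women", 0, 0), ("women", 1, 1), ("women", 0, 1),
]

per_group = accuracy_by_group(records)
print(per_group)               # accuracy for each group
print(largest_gap(per_group))  # a large gap suggests the model favors one group
```

A large gap between groups is a warning sign, not a full diagnosis. It tells you where to look, for example at whether the training data had enough examples from the group with the lower score.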

2. Privacy

Privacy is very important. AI often uses personal data to learn and make decisions. This data can include names, addresses, and even health information. We need to make sure this data is safe. People should know how their data is being used. They should have control over it.

- Keep data safe

- Inform people about data use

- Give control to people
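
The points above can be sketched in code. This is a minimal example under stated assumptions: the `consent` field, the list of identifier fields, and the key are all hypothetical, and the keyed hash shown here is only one way to hide names and addresses before data is used.

```python
import hashlib
import hmac

# Hypothetical key for illustration; a real system would load it from a secret store.
SECRET_KEY = b"example-key-not-for-production"

# Fields treated as direct identifiers in this sketch (an assumption).
DIRECT_IDENTIFIERS = {"name", "address"}


def pseudonymize(record, key=SECRET_KEY):
    """Return a copy of record with direct identifiers replaced by a keyed hash."""
    safe = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            safe[field] = digest.hexdigest()[:16]
        else:
            safe[field] = value
    return safe


def usable_records(records):
    """Keep only records whose owner agreed to this use, then pseudonymize them."""
    return [pseudonymize(r) for r in records if r.get("consent") is True]


# Made-up records for illustration.
records = [
    {"name": "Alice Example", "address": "1 Main St", "age": 34, "consent": True},
    {"name": "Bob Example", "address": "2 Side St", "age": 51, "consent": False},
]
for row in usable_records(records):
    print(row)
```

Replacing names with a hash is pseudonymization, not full anonymization. Other fields, such as health information, can still identify a person and need their own protection.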

3. Transparency

Transparency means being open and clear. People should know how AI makes decisions. They should understand how AI works. This helps in building trust. If AI makes a mistake, people should know why. Transparency helps in fixing problems.
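
For simple models, one way to show how a decision was made is to report how much each input added to or subtracted from the final score. The sketch below assumes a basic linear scoring model. The feature names, weights, and values are invented, and more complex models need other explanation methods.

```python
def explain_score(weights, bias, features):
    """Return the score of a linear model and each feature's contribution to it."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical model and input, made up for this example.
weights = {"income": 0.5, "debt": -1.0}
bias = 0.25
features = {"income": 2.0, "debt": 0.5}

score, contributions = explain_score(weights, bias, features)
print("score:", score)
# List the features with the biggest influence first.
for name, value in sorted(contributions.items(), key=lambda item: -abs(item[1])):
    print(name, value)
```

A breakdown like this lets a person see which inputs drove a result, which makes it easier to spot and question a wrong decision.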

4. Responsibility

Who is responsible if AI makes a mistake? This is a big question. Developers should take responsibility for their AI. They should fix problems and make improvements. Responsibility also means thinking about the impact of AI. How will it affect people’s lives? What are the possible risks?

5. Accountability

Accountability is related to responsibility. It means being answerable for actions. If AI makes a wrong decision, someone should be answerable. This can be the developers or the companies using AI. Accountability helps in ensuring that AI is used correctly.
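
Being answerable is easier when every AI decision leaves a record. The sketch below shows one possible way to keep such a record. The field names, the `log_decision` helper, and the sample values are assumptions made for illustration, not a standard format.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    model_version: str  # which model made the decision
    owner: str          # team or person answerable for this model
    inputs: dict        # what the model was given
    decision: str       # what the model decided
    timestamp: str      # when the decision was made (UTC, ISO 8601)


def log_decision(log, model_version, owner, inputs, decision):
    """Append one decision to the log as a JSON line and return the record."""
    record = DecisionRecord(
        model_version=model_version,
        owner=owner,
        inputs=inputs,
        decision=decision,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    log.append(json.dumps(asdict(record), sort_keys=True))
    return record


# Hypothetical usage with made-up values.
audit_log = []
log_decision(audit_log, "loan-model-1.2", "credit-team", {"income": 2.0}, "approve")
print(audit_log[0])
```

With a log like this, a wrong decision can be traced back to a model version and to the people answerable for it.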

6. Safety

Safety is very important in AI development. AI should not harm people. Developers should test AI to make sure it is safe. They should think about all possible risks. Safety measures should be in place to prevent any harm.

7. Inclusiveness

AI should be inclusive. It should work well for everyone. This includes people of different ages, genders, and backgrounds. Inclusiveness makes AI more useful and fair. Developers should think about all kinds of users when creating AI.

8. Human Control

AI should not replace humans. It should help humans. People should have control over AI. They should be able to make the final decisions. This ensures that AI is used in the right way.
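
One common way to keep people in control is to treat the AI output as a suggestion. The sketch below is a simple illustration: a human decision always wins, and low-confidence suggestions wait for a person. The function name and the 0.9 threshold are assumptions chosen for the example.

```python
def final_decision(ai_suggestion, confidence, human_decision=None, threshold=0.9):
    """Return (decision, decided_by). A human decision always overrides the AI."""
    if human_decision is not None:
        return human_decision, "human"
    if confidence < threshold:
        # The AI is not sure enough, so nothing happens until a person decides.
        return None, "pending_human_review"
    return ai_suggestion, "ai"


# Hypothetical cases for illustration.
print(final_decision("approve", 0.95))                         # confident AI suggestion
print(final_decision("approve", 0.60))                         # waits for a person
print(final_decision("approve", 0.95, human_decision="deny"))  # human overrides
```

For high-stakes decisions, the threshold can be raised so that a person reviews every case.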

Frequently Asked Questions

What Is AI Ethics?

AI ethics involves principles guiding the design and use of artificial intelligence to ensure fairness, transparency, and accountability.

Why Is Transparency Important In AI?

Transparency in AI allows users to understand how decisions are made, fostering trust and accountability.

How Can AI Bias Be Prevented?

Reducing AI bias requires diverse and representative datasets, fairness checks on the model's results, and continuous monitoring after release.

What Are The Risks Of Unchecked AI?

Unchecked AI can lead to privacy violations, job displacement, and biased decision-making, impacting society negatively.

Conclusion

Ethical considerations are very important in AI development. We need to make sure AI is fair, safe, and transparent. People should have control over their data. Developers should be responsible and accountable. By thinking about these ethical considerations, we can create AI that benefits everyone.

Key Points

- AI should be fair and unbiased.

- Privacy of data is crucial.

- Transparency helps in building trust.

- Developers should take responsibility.

- AI should be safe and inclusive.

- Humans should have control over AI.

By following these guidelines, we can develop ethical AI. This will help in creating a better and safer world for everyone.